Introduction: The Power of Efficient Caching

Caching is an essential concept in software development, where data access speed often determines the success or failure of an application. It is a strategy for storing frequently accessed data in a quickly retrievable location, reducing the need to repeatedly fetch that data from slower sources. Caching underlies the responsiveness of web applications, the efficiency of database systems, and the optimization of resource-heavy operations.

Efficient caching mechanisms are like a well-organized library. They ensure that the most commonly used books are readily available on the front shelves, making it easy for readers to access them. Caches save time and resources by providing quick access to frequently needed data, reducing the load on the primary data source.

In the world of caching, the Least Recently Used (LRU) cache stands out as a simple yet powerful solution. An LRU cache keeps track of the order in which items were accessed and, when it runs out of space, discards the item that has gone unused the longest. It is like a bookshelf where every book you read is put back at the front, so the books nobody has touched in a while drift to the back and are the first to go when the shelf is full.

In this guide, we will look at why efficient caching mechanisms are essential, explore how the LRU cache optimizes data access, and implement one in Go. Whether you’re a web developer, database administrator, or software architect, understanding LRU caching helps you build high-performance, responsive applications.

Understanding Caching: The Backbone of Performance

Caching is the backbone of performance optimization in software development. It’s the practice of storing frequently accessed data in a fast-access location, ensuring that data is readily available when needed. Caching is essential for various reasons, including speed, resource optimization, and overall system efficiency.

- Speed and Responsiveness: Caching significantly enhances the speed and responsiveness of applications. By storing data in a cache, the need to repeatedly fetch the same data from a slower source, such as a database or a remote server, is greatly reduced. This leads to faster response times, smoother user experiences, and improved application performance.

- Resource Efficiency: Caching is a resource-efficient strategy. It reduces the load on primary data sources, which are often resource-intensive, by minimizing the number of requests to these sources. This not only conserves resources but also prevents potential bottlenecks.

- Redundancy Elimination: Caching eliminates redundant data retrieval. When many users request the same data, the cache can serve all of them after a single fetch from the primary source. Requests that miss at the same moment can still each reach the source unless the cache coalesces them into a single load.

Types of Caching Mechanisms:

There are various types of caching mechanisms, each catering to specific use cases:

- In-Memory Caches: These caches store data in memory, allowing for lightning-fast access. In-memory caches are ideal for frequently accessed data that needs to be readily available.

- Disk-Based Caches: Disk-based caches, as the name suggests, store data on disk. While they may not be as fast as in-memory caches, they are suitable for larger datasets that cannot fit entirely in memory.

- Browser Caches: Web browsers utilize caching to store static assets such as images, scripts, and stylesheets. This reduces the need to re-download these assets when visiting the same website.

Cache Eviction and Replacement Strategies:

Caches have limited storage capacity, and it’s crucial to determine which data to retain and which to evict when the cache is full. Cache eviction and replacement strategies play a key role in this process. Common strategies include:

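- Least Recently Used (LRU): The LRU strategy removes the least recently accessed item from the cache when it’s full. It works well when data that has not been accessed recently is unlikely to be needed again soon.

- Most Recently Used (MRU): MRU removes the most recently accessed item when the cache is full. This sounds counterintuitive, but it suits access patterns in which an item that was just used is the least likely to be needed again, such as repeated sequential scans over a dataset larger than the cache.

- First-In-First-Out (FIFO): FIFO evicts items in the order they entered the cache, regardless of how recently or how often they have been accessed. The first item added is the first to be removed.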

- Least Frequently Used (LFU): LFU removes items based on how often they are accessed. Less frequently accessed items are removed.
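The following sketch makes the difference between LRU and FIFO concrete. It replays one short, made-up access trace against both policies with a capacity of two and reports which key each policy evicts. The simulate function and the trace are illustrative assumptions. A linear scan over a slice is fine for a toy example, but a real cache would not look up keys this way.

```go
package main

import "fmt"

// simulate replays a trace of key accesses against a cache of the given
// capacity and returns the evicted keys in order. With lru=true a hit moves
// the key to the most-recent end; with lru=false hits leave the order
// unchanged, which is FIFO behavior.
func simulate(trace []string, capacity int, lru bool) []string {
	if capacity < 1 {
		panic("capacity must be at least 1")
	}
	var order []string // order[0] is the next eviction candidate
	var evicted []string
	for _, k := range trace {
		idx := -1
		for i, existing := range order {
			if existing == k {
				idx = i
				break
			}
		}
		if idx >= 0 { // cache hit
			if lru {
				// Move the key to the back (most recently used).
				order = append(order[:idx], order[idx+1:]...)
				order = append(order, k)
			}
			continue
		}
		// Cache miss: evict the front entry if the cache is full.
		if len(order) == capacity {
			evicted = append(evicted, order[0])
			order = order[1:]
		}
		order = append(order, k)
	}
	return evicted
}

func main() {
	trace := []string{"A", "B", "A", "C"}
	fmt.Println("LRU evicts:", simulate(trace, 2, true))   // LRU evicts: [B]
	fmt.Println("FIFO evicts:", simulate(trace, 2, false)) // FIFO evicts: [A]
}
```

Both caches are full when C arrives. LRU evicts B because A was read after B was added. FIFO ignores that read and evicts A, the first key inserted.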

In software development, choosing the right caching mechanism and eviction strategy is crucial for optimizing performance and efficiency. The LRU cache, as one of the eviction strategies, plays a significant role in ensuring that the most relevant data remains readily available for use. In the following sections, we’ll delve deeper into LRU caching and its implementation in Go.

The LRU Cache Concept: Efficiency Through Least Recently Used

The Least Recently Used (LRU) cache is a widely used caching mechanism. It keeps recently accessed data in the cache and discards the data that has gone unused the longest. At its core, the LRU cache follows the “Least Recently Used” principle: when the cache reaches its maximum capacity, the least recently accessed item is removed to make space for new data. This simple yet effective concept is pivotal in optimizing data retrieval and resource utilization.

Operating on the “Least Recently Used” Principle:

The LRU cache assumes that data accessed less recently is less likely to be needed soon. When the cache is full and a new item needs to be stored, the LRU cache finds the item that has not been accessed for the longest time and evicts it to make space for the new data. This keeps the most recently accessed items in the cache. For workloads with good temporal locality, those are also the items most likely to be requested next.

To illustrate how the LRU cache works, consider a scenario where a web server frequently receives requests for the same web pages. The server can use an LRU cache to store recently requested web pages.

When a new request comes in, the LRU cache checks if the requested page is in the cache. If it is, the page is served directly from the cache, resulting in a significantly faster response time. If the page is not in the cache, the server fetches it from the database, stores it in the cache, and serves it to the client. As the cache reaches its capacity, the LRU cache identifies the least recently accessed web page and removes it to make space for newer pages.
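The request flow just described is commonly called the cache-aside pattern. Below is a minimal sketch of it. It assumes pages are keyed by URL path and uses a hypothetical loadFromDB function as a stand-in for the real database query; that function only counts calls and builds a string. PageCache and Fetch are illustrative names, not part of any library.

```go
package main

import (
	"container/list"
	"fmt"
)

type page struct {
	path string
	body string
}

// PageCache is a small LRU cache of rendered pages keyed by path.
type PageCache struct {
	capacity int
	items    map[string]*list.Element
	order    *list.List // front = most recently used
}

func NewPageCache(capacity int) *PageCache {
	return &PageCache{capacity: capacity, items: make(map[string]*list.Element), order: list.New()}
}

// Fetch serves path from the cache on a hit. On a miss it calls load,
// stores the result (evicting the least recently used page if the cache is
// full), and returns it.
func (c *PageCache) Fetch(path string, load func(string) string) string {
	if el, ok := c.items[path]; ok {
		c.order.MoveToFront(el) // hit: mark as most recently used
		return el.Value.(*page).body
	}
	body := load(path) // miss: go to the slower source
	if c.capacity <= 0 {
		return body // caching disabled
	}
	if c.order.Len() >= c.capacity {
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*page).path)
	}
	c.items[path] = c.order.PushFront(&page{path: path, body: body})
	return body
}

func main() {
	dbCalls := 0
	// loadFromDB is a hypothetical stand-in for a database query.
	loadFromDB := func(path string) string {
		dbCalls++
		return "<html>" + path + "</html>"
	}
	cache := NewPageCache(2)
	for _, p := range []string{"/home", "/about", "/home", "/contact", "/about"} {
		cache.Fetch(p, loadFromDB)
	}
	fmt.Println("database calls:", dbCalls) // database calls: 4
}
```

With a capacity of two, the five requests cause four database calls. Only the second /home request is a hit. /about is evicted when /contact arrives, so its second request goes back to the database.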

Consider another example in a database query optimization context. When a database query is executed multiple times, the LRU cache can store the query result. Subsequent identical queries can be served from the cache, reducing the load on the database and improving query response times.

Examples of LRU Cache Operation:

- Web Browser Caching: Web browsers cache assets like images, scripts, and stylesheets and typically use LRU-like policies to decide what to evict when the cache fills up. When you revisit a website, the browser checks the cache for these assets and serves them locally instead of downloading them again.

- File System Caching: Operating systems keep frequently accessed file data in memory, usually managing it with LRU or an approximation of it such as the CLOCK algorithm. This improves file access speeds and overall system responsiveness.

- Database Query Caching: In database systems, LRU caching can store the results of frequently executed queries, ensuring that subsequent identical queries are answered from the cache.

The LRU cache concept is elegant in its simplicity and yet powerful in its ability to optimize data retrieval and access times. By prioritizing the retention of recently accessed data, it minimizes the need for repetitive and time-consuming data fetches from slower sources, making it an indispensable tool in the world of software development. In the next sections, we’ll explore how to implement an LRU cache in the Go programming language, leveraging the “Least Recently Used” principle to enhance application performance.

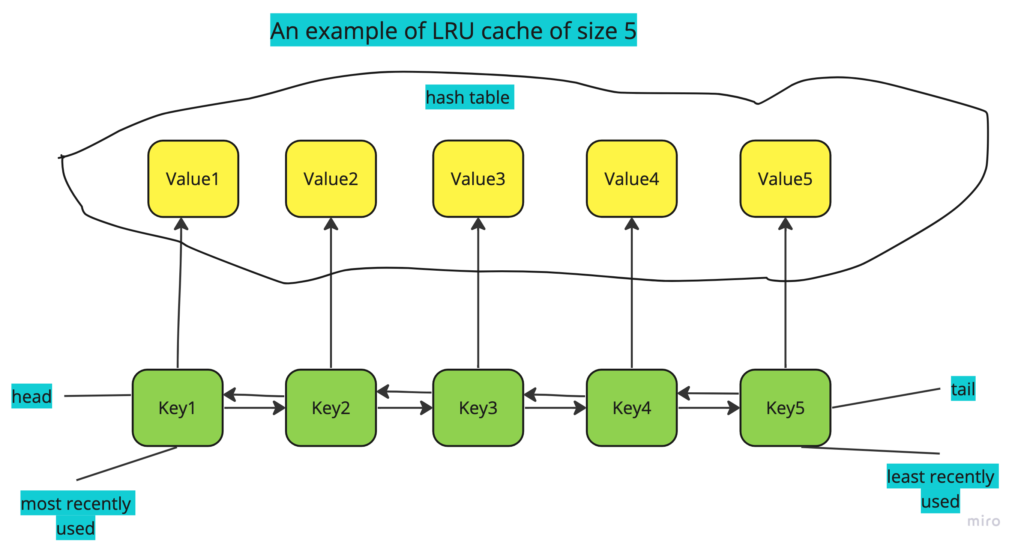

Algorithm for LRU Cache

1. Initialize the LRU cache with a specified capacity.

2. Create a hash map (dictionary) to store key-value pairs.

- Key: Cache key

- Value: Reference to the corresponding node in the linked list.

3. Create a doubly linked list to maintain the order of items in the cache.

- Nodes represent key-value pairs.

- The most recently used item is at the front (head) of the list.

- The least recently used item is at the end (tail) of the list.

4. When performing a GET operation:

a. Check if the key exists in the hash map:

- If it exists, retrieve the corresponding node.

- Move the node to the front of the linked list (indicating it's the most recently used).

- Return the value associated with the key.

- If it doesn't exist, return -1 (indicating a cache miss).

5. When performing a PUT operation:

a. Check if the key exists in the hash map:

- If it exists, update the value associated with the key.

- Move the corresponding node to the front of the linked list.

b. If the key doesn't exist:

- Create a new node with the key-value pair.

- Add the new node to the front of the linked list.

- Insert a reference to the node in the hash map.

c. If the cache size exceeds the capacity:

- Remove the node at the end of the linked list (indicating the least recently used item).

- Remove the corresponding entry from the hash map.

6. Cache eviction ensures that the cache size does not exceed the specified capacity. The least recently used item is removed to make space for new items.

This algorithm ensures that the most recently accessed items are kept at the front of the linked list, while the least recently used items are at the end. When the cache is full, the eviction process maintains the cache's capacity by removing the least recently used item.

Note that the exact implementation may vary depending on the programming language and data structures used. The provided algorithm serves as a high-level guide for designing an LRU cache.
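The algorithm above can also be implemented without container/list, which makes the pointer manipulation in steps 3 through 5 explicit. The sketch below is illustrative and not taken from a library. It assumes integer keys and values and returns -1 on a miss, as in step 4. It also uses two sentinel nodes, head and tail, so that inserting and unlinking never need nil checks; the sentinels are an implementation choice, not part of the algorithm.

```go
package main

import "fmt"

// node is one entry in the doubly linked list (step 3).
type node struct {
	key, value int
	prev, next *node
}

// LRU combines a hash map (step 2) with a doubly linked list (step 3).
// head.next is the most recently used entry; tail.prev is the least.
type LRU struct {
	capacity   int
	items      map[int]*node
	head, tail *node // sentinels; they never hold data
}

// NewLRU implements step 1.
func NewLRU(capacity int) *LRU {
	head, tail := &node{}, &node{}
	head.next, tail.prev = tail, head
	return &LRU{capacity: capacity, items: make(map[int]*node), head: head, tail: tail}
}

// unlink removes n from the list in O(1).
func (c *LRU) unlink(n *node) {
	n.prev.next = n.next
	n.next.prev = n.prev
}

// pushFront inserts n right after the head sentinel in O(1).
func (c *LRU) pushFront(n *node) {
	n.prev, n.next = c.head, c.head.next
	c.head.next.prev = n
	c.head.next = n
}

// Get implements step 4.
func (c *LRU) Get(key int) int {
	n, ok := c.items[key]
	if !ok {
		return -1 // cache miss
	}
	c.unlink(n)
	c.pushFront(n) // mark as most recently used
	return n.value
}

// Put implements steps 5 and 6.
func (c *LRU) Put(key, value int) {
	if c.capacity <= 0 {
		return // nothing can be stored
	}
	if n, ok := c.items[key]; ok { // 5a: update and move to front
		n.value = value
		c.unlink(n)
		c.pushFront(n)
		return
	}
	n := &node{key: key, value: value} // 5b: insert a new node
	c.pushFront(n)
	c.items[key] = n
	if len(c.items) > c.capacity { // 5c: evict the least recently used
		lru := c.tail.prev
		c.unlink(lru)
		delete(c.items, lru.key)
	}
}

func main() {
	c := NewLRU(2)
	c.Put(1, 10)
	c.Put(2, 20)
	c.Get(1)     // key 1 becomes most recently used
	c.Put(3, 30) // evicts key 2
	fmt.Println(c.Get(2), c.Get(1), c.Get(3)) // -1 10 30
}
```

Every operation does one map lookup and a constant number of pointer updates, which is why both Get and Put run in O(1) average time.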

Implementing an LRU Cache: A Walkthrough in Go

To implement an LRU cache, we need to choose appropriate data structures and define the operations for managing the cache. In Go, a basic LRU cache is typically built from a doubly linked list (for example, the standard library’s container/list package) combined with a map. Let’s walk through the steps to create one, and discuss the trade-offs and considerations involved.

Note: Please install Go first.

Data Structures in LRU Cache Implementation:

- Linked List: A doubly linked list is used to maintain the order of items in the cache. The linked list represents the access history of items, with the most recently accessed item at the front of the list and the least recently accessed item at the end. This order makes it easy to identify and remove the least recently used item when the cache is full.

- Hash Map: A hash map (or hash table) is used to provide fast and efficient access to cache items by their keys. Each node in the linked list contains a key-value pair, and the hash map stores references to these nodes. This allows for quick lookups and updates when accessing items in the cache.

Steps to Create a Basic LRU Cache in Go:

- Initialize Data Structures: Create a struct to represent the cache, which contains a linked list, a hash map, and variables to track the cache size and capacity.

- Get Operation: When a “get” operation is performed on the cache, the hash map is used to look up the key. If the key exists, the corresponding node is moved to the front of the linked list to indicate that it’s the most recently used item. The value is returned.

- Put Operation: When a “put” operation is performed to add an item to the cache, the hash map is checked. If the key already exists, the node is updated with the new value and moved to the front of the linked list. If the key doesn’t exist, a new node is created, added to the front of the linked list, and inserted into the hash map. If the cache size exceeds the capacity, the least recently used item (i.e., the item at the end of the linked list) is removed from both the linked list and the hash map.

- Eviction: When evicting items, the cache ensures that the least recently used item is removed to make space for new items. This ensures that the cache remains within its defined capacity.

Trade-Offs and Considerations in Implementation:

- Complexity: Implementing an LRU cache requires managing both a linked list and a hash map, which can add complexity to the code.

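- Performance: The choice of data structures determines the cache’s performance. With a hash map and a doubly linked list, both “get” and “put” run in O(1) average time: the map finds the node directly, and moving or removing a node only relinks its neighbors.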

- Concurrency: A cache shared across goroutines must synchronize every operation, including reads, because a “get” moves the accessed item to the front of the list. Without synchronization, race conditions can corrupt both the list and the map.

- Capacity: The cache’s capacity should be carefully chosen to balance memory usage and cache effectiveness.

- Hit Rate: Monitoring and optimizing the cache’s hit rate is essential for ensuring that frequently accessed items remain in the cache.
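One common way to address the concurrency consideration in Go is to guard every operation with a sync.Mutex. The sketch below assumes string keys and values and wraps a container/list-based LRU. SafeLRU and its methods are illustrative names. A sync.RWMutex read lock is not enough for Get, because a hit moves the entry to the front of the list and therefore writes to shared state.

```go
package main

import (
	"container/list"
	"fmt"
	"sync"
)

type entry struct {
	key, value string
}

// SafeLRU is an LRU cache that is safe for concurrent use.
type SafeLRU struct {
	mu       sync.Mutex
	capacity int
	items    map[string]*list.Element
	order    *list.List // front = most recently used
}

func NewSafeLRU(capacity int) *SafeLRU {
	return &SafeLRU{capacity: capacity, items: make(map[string]*list.Element), order: list.New()}
}

func (c *SafeLRU) Get(key string) (string, bool) {
	c.mu.Lock()
	defer c.mu.Unlock()
	el, ok := c.items[key]
	if !ok {
		return "", false
	}
	c.order.MoveToFront(el) // mutates the list, so a full lock is required
	return el.Value.(*entry).value, true
}

func (c *SafeLRU) Put(key, value string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	if c.capacity <= 0 {
		return
	}
	if el, ok := c.items[key]; ok {
		el.Value.(*entry).value = value
		c.order.MoveToFront(el)
		return
	}
	c.items[key] = c.order.PushFront(&entry{key: key, value: value})
	if c.order.Len() > c.capacity {
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*entry).key)
	}
}

func (c *SafeLRU) Len() int {
	c.mu.Lock()
	defer c.mu.Unlock()
	return c.order.Len()
}

func main() {
	c := NewSafeLRU(100)
	var wg sync.WaitGroup
	for g := 0; g < 8; g++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			for i := 0; i < 1000; i++ {
				key := fmt.Sprintf("k%d", (id*1000+i)%250)
				c.Put(key, key)
				c.Get(key)
			}
		}(g)
	}
	wg.Wait()
	fmt.Println("entries:", c.Len()) // entries: 100
}
```

A single lock is simple and correct, but every goroutine contends on it. Under heavy load, splitting the keys across several independently locked shards is a common next step.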

Implementing an LRU cache in Go requires thoughtful design and careful consideration of these factors. By using the “Least Recently Used” principle, an LRU cache can efficiently manage data access and enhance the performance of various applications, from web servers to databases.

Let us walk through a sample implementation:

```go
package main

import (
	"container/list"
	"fmt"
)

// LRUCache represents the LRU cache structure.
type LRUCache struct {
	capacity int
	cache    map[int]*list.Element // key -> list element holding *Entry
	list     *list.List            // front = most recently used, back = least
}

// Entry represents a key-value pair in the cache.
type Entry struct {
	key   int
	value int
}

// Constructor initializes the LRU cache with a specified capacity.
func Constructor(capacity int) LRUCache {
	return LRUCache{
		capacity: capacity,
		cache:    make(map[int]*list.Element),
		list:     list.New(),
	}
}

// Get retrieves the value associated with a key from the cache,
// or returns -1 if the key is not present.
func (c *LRUCache) Get(key int) int {
	if elem, ok := c.cache[key]; ok {
		c.list.MoveToFront(elem) // mark as most recently used
		return elem.Value.(*Entry).value
	}
	return -1
}

// Put adds or updates a key-value pair in the cache.
func (c *LRUCache) Put(key int, value int) {
	if c.capacity <= 0 {
		return // a zero-capacity cache stores nothing
	}
	if elem, ok := c.cache[key]; ok {
		c.list.MoveToFront(elem)
		elem.Value.(*Entry).value = value
		return
	}
	if len(c.cache) >= c.capacity {
		// Evict the least recently used item (the back of the list).
		oldest := c.list.Back()
		delete(c.cache, oldest.Value.(*Entry).key)
		c.list.Remove(oldest)
	}
	entry := &Entry{key: key, value: value}
	c.cache[key] = c.list.PushFront(entry)
}

func main() {
	// Create an LRU cache with a capacity of 2.
	cache := Constructor(2)

	// Put key-value pairs in the cache.
	cache.Put(1, 1)
	cache.Put(2, 2)

	// Retrieve and print values from the cache.
	fmt.Println(cache.Get(1)) // Output: 1
	fmt.Println(cache.Get(2)) // Output: 2

	// Add a new key-value pair, which triggers cache eviction.
	cache.Put(3, 3)

	// Key 1 is evicted: it is the least recently used, because
	// key 2 was read after it.
	fmt.Println(cache.Get(1)) // Output: -1
	fmt.Println(cache.Get(2)) // Output: 2
}
```

This code defines an LRUCache struct with Get and Put methods to retrieve and store key-value pairs in the cache. When the cache reaches its capacity, the least recently used item is evicted to make space for new entries. You can create an instance of the LRUCache and perform cache operations as demonstrated in the main function.
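The sample has two limitations. Get signals a miss with -1, which cannot be distinguished from a stored value of -1, and keys and values are fixed to int. A possible variant, assuming Go 1.18 or later for type parameters, returns a (value, ok) pair in the style of a Go map lookup. The names Cache and New are illustrative.

```go
package main

import (
	"container/list"
	"fmt"
)

type entry[K comparable, V any] struct {
	key   K
	value V
}

// Cache is a generic LRU cache. The zero value is not usable; call New.
type Cache[K comparable, V any] struct {
	capacity int
	items    map[K]*list.Element
	order    *list.List // front = most recently used
}

func New[K comparable, V any](capacity int) *Cache[K, V] {
	return &Cache[K, V]{capacity: capacity, items: make(map[K]*list.Element), order: list.New()}
}

// Get reports whether key is present, like a comma-ok map lookup.
func (c *Cache[K, V]) Get(key K) (V, bool) {
	if el, ok := c.items[key]; ok {
		c.order.MoveToFront(el)
		return el.Value.(*entry[K, V]).value, true
	}
	var zero V
	return zero, false
}

// Put adds or updates key, evicting the least recently used entry if needed.
func (c *Cache[K, V]) Put(key K, value V) {
	if c.capacity <= 0 {
		return
	}
	if el, ok := c.items[key]; ok {
		el.Value.(*entry[K, V]).value = value
		c.order.MoveToFront(el)
		return
	}
	c.items[key] = c.order.PushFront(&entry[K, V]{key: key, value: value})
	if c.order.Len() > c.capacity {
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*entry[K, V]).key)
	}
}

func main() {
	c := New[string, int](2)
	c.Put("a", -1) // -1 is now an ordinary value, not a miss marker
	c.Put("b", 2)
	v, ok := c.Get("a")
	fmt.Println(v, ok) // -1 true
	c.Put("c", 3) // evicts "b", the least recently used key
	_, ok = c.Get("b")
	fmt.Println(ok) // false
}
```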

Optimizing LRU Cache in Go: Enhancing Performance and Efficiency

Optimizing an LRU (Least Recently Used) cache in Go involves considering various factors to ensure efficient and high-performance caching. Here are some techniques to optimize LRU cache performance in Go:

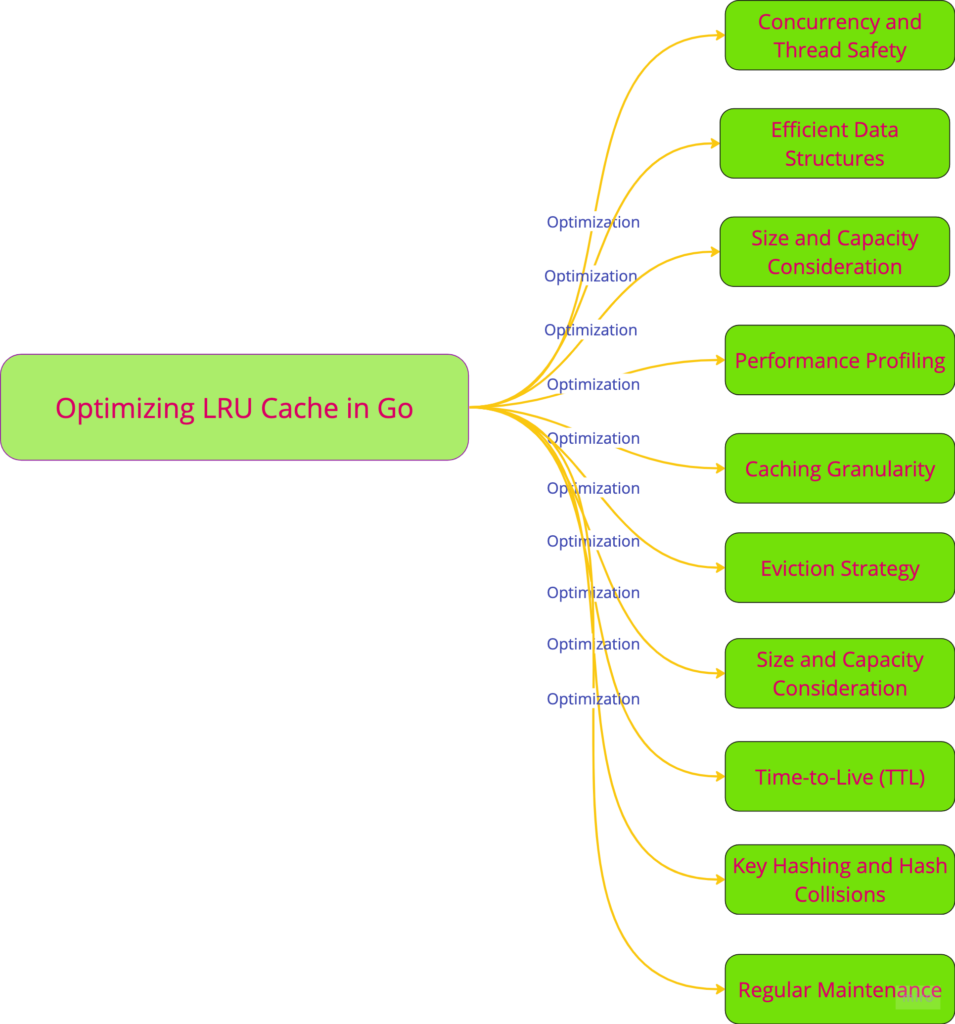

1. Concurrency and Thread Safety:

- In concurrent programs, the cache must be safe for use by multiple goroutines to prevent race conditions and data corruption. The simplest option is a sync.Mutex held for the duration of each operation. Another option is to let a single goroutine own the cache and serve requests sent to it over channels. Either way, a thread-safe LRU cache keeps data consistent and reliable in concurrent scenarios.

2. Efficient Data Structures:

- Choose efficient data structures to implement the LRU cache. Go’s built-in map and the standard library’s container/list package (a doubly linked list) are well suited for this purpose. Be aware that container/list stores each value as an interface (any). The common pattern of storing a pointer to a separate entry struct therefore costs two allocations per item and a type assertion on every access. A hand-written or generic list can store the key and value in the node itself when memory overhead matters.

3. Size and Capacity Consideration:

- Carefully determine the cache’s size and capacity. Over-sizing the LRU cache can lead to inefficient memory usage, while under-sizing it may result in frequent cache misses. Regularly monitor cache usage to strike the right balance between capacity and performance.

4. Performance Profiling:

- Employ Go’s profiling tools, such as pprof (through runtime/pprof, net/http/pprof, or the -cpuprofile and -memprofile flags of go test), to analyze cache performance. Profiling can identify bottlenecks and the areas where optimization is needed, and its data can guide adjustments to cache operations.

5. Caching Granularity:

- Consider the granularity of caching. Depending on the use case, you may cache entire objects, database query results, or individual data elements. Fine-tuning the level of caching granularity can impact performance and cache efficiency.

6. Eviction Strategy:

- Keep eviction cheap by removing the entry at the tail of the list in O(1) rather than scanning for the oldest item. Plain LRU can evict useful items, for example when a one-off scan over many keys pushes frequently used entries out. If that happens, consider a policy that also accounts for access frequency.

7. Time-to-Live (TTL):

- Implement a time-to-live (TTL) mechanism for cache entries, allowing items to expire after a certain time period. This ensures that stale or outdated data is automatically removed, freeing up cache space for fresh data.

8. Key Hashing and Hash Collisions:

- Go’s built-in map handles hashing and collision resolution internally, so there is little to tune directly. The main lever is the key type. Long string keys or large struct keys cost more to hash and compare than small integers, so prefer compact keys where possible.

9. Regular Maintenance:

- Implement periodic cache maintenance routines to clean up expired or rarely used items. This keeps the cache tidy and ensures optimal performance.
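Items 7 and 9 can be combined, as in the sketch below. Each entry records an expiry time. Get treats an expired entry as a miss and removes it. A PurgeExpired method (an illustrative name) performs the periodic cleanup; in a real program it might be called from a goroutine driven by time.Ticker. The sketch makes several assumptions. All entries share a single TTL, counted from the last write, so reads do not extend it. Access is single-goroutine, so background cleanup would also need the locking from item 1. The clock is injected as a function so the behavior can be demonstrated without sleeping.

```go
package main

import (
	"container/list"
	"fmt"
	"time"
)

type ttlEntry struct {
	key, value string
	expiresAt  time.Time
}

// TTLCache is an LRU cache whose entries also expire a fixed TTL after their
// last write. It is not safe for concurrent use as written.
type TTLCache struct {
	capacity int
	ttl      time.Duration
	now      func() time.Time // injectable clock
	items    map[string]*list.Element
	order    *list.List // front = most recently used
}

func NewTTLCache(capacity int, ttl time.Duration, now func() time.Time) *TTLCache {
	return &TTLCache{capacity: capacity, ttl: ttl, now: now,
		items: make(map[string]*list.Element), order: list.New()}
}

func (c *TTLCache) remove(el *list.Element) {
	c.order.Remove(el)
	delete(c.items, el.Value.(*ttlEntry).key)
}

func (c *TTLCache) Get(key string) (string, bool) {
	el, ok := c.items[key]
	if !ok {
		return "", false
	}
	e := el.Value.(*ttlEntry)
	if !c.now().Before(e.expiresAt) { // expired: treat as a miss
		c.remove(el)
		return "", false
	}
	c.order.MoveToFront(el)
	return e.value, true
}

func (c *TTLCache) Put(key, value string) {
	if c.capacity <= 0 {
		return
	}
	exp := c.now().Add(c.ttl)
	if el, ok := c.items[key]; ok {
		e := el.Value.(*ttlEntry)
		e.value, e.expiresAt = value, exp
		c.order.MoveToFront(el)
		return
	}
	c.items[key] = c.order.PushFront(&ttlEntry{key: key, value: value, expiresAt: exp})
	if c.order.Len() > c.capacity {
		c.remove(c.order.Back()) // LRU eviction still applies
	}
}

// PurgeExpired removes every expired entry and returns how many it removed.
func (c *TTLCache) PurgeExpired() int {
	removed, now := 0, c.now()
	for el := c.order.Front(); el != nil; {
		next := el.Next() // save before el is unlinked
		if !now.Before(el.Value.(*ttlEntry).expiresAt) {
			c.remove(el)
			removed++
		}
		el = next
	}
	return removed
}

// fakeClock is a manually advanced clock, handy for demos and tests.
type fakeClock struct{ t time.Time }

func (f *fakeClock) Now() time.Time          { return f.t }
func (f *fakeClock) Advance(d time.Duration) { f.t = f.t.Add(d) }

func main() {
	clk := &fakeClock{t: time.Date(2024, 1, 1, 0, 0, 0, 0, time.UTC)}
	c := NewTTLCache(10, time.Minute, clk.Now)
	c.Put("session", "abc")
	clk.Advance(30 * time.Second)
	fmt.Println(c.Get("session")) // abc true
	clk.Advance(time.Minute)
	fmt.Println(c.PurgeExpired()) // 1
}
```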

Optimizing an LRU cache in Go involves a balance between memory usage, performance, and thread safety. Leveraging Go’s built-in features and tools, developers can fine-tune their LRU cache implementations to meet the specific needs of their applications, ensuring efficient and high-performance caching. Regular performance profiling and monitoring are key practices to maintain an optimized LRU cache over time.

Testing and Debugging LRU Cache Implementations: Ensuring Reliability

Testing and debugging LRU (Least Recently Used) cache implementations are essential to ensure their reliability and performance. Here are key approaches to validate and optimize your LRU cache in Go:

1. Unit Testing:

- Develop comprehensive unit tests for the cache’s core “get” and “put” operations. Cover hits, misses, and eviction order, plus edge cases such as updating an existing key and capacities of zero or one.

2. Benchmarking:

- Utilize Go’s benchmarking framework to measure the cache’s performance under various workloads. Benchmark tests help identify bottlenecks and inefficiencies in cache operations. This data is crucial for fine-tuning the cache’s performance.

3. Profiling:

- Use Go’s profiling tools to identify performance hotspots, memory usage, and resource consumption. Profiling helps pinpoint areas of improvement, such as optimizing cache eviction, reducing memory overhead, and streamlining data access.

4. Concurrent Testing:

- Implement concurrent testing to ensure thread safety. Verify that the cache behaves correctly when accessed by multiple goroutines at once, and run these tests with Go’s race detector (go test -race). It catches data races that would otherwise surface only as intermittent corruption.

5. Integration Testing:

- Conduct integration testing with real-world scenarios and data. Simulate the cache’s interaction with your application to ensure that it works seamlessly in the intended context.

6. Error Handling and Edge Cases:

- Test error handling and edge cases rigorously. Validate the cache’s behavior with unexpected input, such as a zero or negative capacity, and when inserts exceed the configured capacity.

7. Debugging Tools:

- Employ a debugger such as Delve, printf-style debugging, and log statements to trace and fix runtime problems.

8. Load Testing:

- Perform load testing to assess how the cache handles high levels of traffic and data access. Load testing can reveal performance bottlenecks and scalability issues.

9. Continuous Monitoring:

- Implement continuous monitoring and logging to track cache behavior in production. Monitoring helps identify issues that may only arise under real-world conditions.

By combining these testing and debugging approaches, developers can ensure that their LRU cache implementations are reliable, efficient, and capable of delivering optimal performance in various use cases. This thorough testing and debugging process contributes to the stability and effectiveness of LRU caches in real-world applications.
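The table-driven check below illustrates the unit-testing approach. It replays sequences of operations against a cache and compares every Get result with the expected value, covering eviction, updates, misses, and a zero capacity. The check runs as a plain program so that it is self-contained. In a real project the same table would normally live in a _test.go file and run with go test (adding -race for concurrent code). The op type, the cases, and the compact cache under test are illustrative assumptions.

```go
package main

import (
	"container/list"
	"fmt"
)

type pair struct{ key, value int }

// lru is a compact int-to-int LRU cache used as the code under test.
type lru struct {
	capacity int
	items    map[int]*list.Element
	order    *list.List // front = most recently used
}

func newLRU(capacity int) *lru {
	return &lru{capacity: capacity, items: make(map[int]*list.Element), order: list.New()}
}

func (c *lru) Get(key int) int {
	if el, ok := c.items[key]; ok {
		c.order.MoveToFront(el)
		return el.Value.(*pair).value
	}
	return -1
}

func (c *lru) Put(key, value int) {
	if c.capacity <= 0 {
		return
	}
	if el, ok := c.items[key]; ok {
		el.Value.(*pair).value = value
		c.order.MoveToFront(el)
		return
	}
	c.items[key] = c.order.PushFront(&pair{key, value})
	if c.order.Len() > c.capacity {
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*pair).key)
	}
}

// op is one step of a trace. For "get", value is the expected result.
type op struct {
	kind       string
	key, value int
}

// checkTrace replays ops against a fresh cache and returns any mismatches.
func checkTrace(capacity int, ops []op) []string {
	c := newLRU(capacity)
	var failures []string
	for i, o := range ops {
		switch o.kind {
		case "put":
			c.Put(o.key, o.value)
		case "get":
			if got := c.Get(o.key); got != o.value {
				failures = append(failures, fmt.Sprintf("step %d: Get(%d) = %d, want %d", i, o.key, got, o.value))
			}
		}
	}
	return failures
}

func main() {
	cases := []struct {
		name     string
		capacity int
		ops      []op
	}{
		{"evicts least recently used", 2, []op{
			{"put", 1, 1}, {"put", 2, 2},
			{"get", 1, 1},  // hit; key 1 becomes most recently used
			{"put", 3, 3},  // evicts key 2
			{"get", 2, -1}, // miss after eviction
			{"get", 3, 3},
		}},
		{"updates existing key", 2, []op{{"put", 1, 1}, {"put", 1, 10}, {"get", 1, 10}, {"get", 4, -1}}},
		{"zero capacity stores nothing", 0, []op{{"put", 1, 1}, {"get", 1, -1}}},
	}
	for _, tc := range cases {
		if failures := checkTrace(tc.capacity, tc.ops); len(failures) > 0 {
			fmt.Println("FAIL", tc.name, failures)
		} else {
			fmt.Println("ok  ", tc.name)
		}
	}
}
```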

Useful Go Libraries and Packages for Caching

Go offers a range of libraries and packages for caching that simplify the implementation of efficient caching strategies in your applications. Here are some popular Go libraries and packages for caching:

1. “groupcache” by Google:

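- Features: Developed by Google, “groupcache” is a distributed caching library in which each key is owned by one peer in a group of nodes. It coalesces concurrent requests for the same missing key so the value is loaded only once, and it can mirror very popular keys to other peers. It does not support expiration, updates, or deletes: a key’s value is expected never to change.

- Use Cases: “groupcache” suits large-scale applications that need distributed caching of immutable data, such as content addressed by a hash or version.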

2. “go-cache” by patrickmn:

- Features: “go-cache” is an in-memory key-value cache for Go, similar in spirit to Memcached but running inside a single process. It supports per-item expiration times (TTL) with periodic cleanup of expired items and is safe for concurrent use. It does not limit the number of items with an LRU policy.

- Use Cases: It’s suitable for single-machine applications, from web servers to microservices, where in-memory caching is required for performance optimization.

3. “ristretto” by Dgraph Labs:

- Features: “ristretto” is a fast, concurrent in-memory cache. Rather than plain LRU, it combines a TinyLFU-based admission policy with a sampled LFU eviction policy. It bounds the cache by a configurable total cost (for example, bytes) rather than by item count.

- Use Cases: Use “ristretto” in applications where memory efficiency is crucial, such as high-throughput web services and database systems.

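4. “bigcache” by Allegro:

- Features: “bigcache” is an in-memory cache designed for low-latency, high-throughput applications that hold very large numbers of entries. It splits the cache into independently locked shards and stores entries as serialized bytes in large byte slices. Keeping pointers out of its maps means the Go garbage collector does not have to scan every cached item. Entries expire after a configurable lifetime rather than being evicted in LRU order.

- Use Cases: It’s suitable for scenarios where fast data access with minimal latency is required, such as real-time applications that cache millions of small items.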

5. “gomemcache” by bradfitz/gomemcache:

- Features: “gomemcache” is a Go client library for interacting with Memcached servers. It provides a convenient and efficient way to use Memcached for distributed caching.

- Use Cases: When integrating with Memcached for distributed caching, “gomemcache” simplifies client interactions.

6. “go-redis” by go-redis/redis:

- Features: “go-redis” is a comprehensive client library for Redis, a popular in-memory data store. It offers a wide range of features for efficient Redis caching and data manipulation.

- Use Cases: When working with Redis for caching and data storage, “go-redis” simplifies the process and provides extensive functionality.

Selecting the right caching library or package depends on your specific application requirements. Consider factors such as scalability, memory efficiency, performance, and the level of control you need when choosing a caching solution for your Go projects.

Case Study: Implementing LRU Cache in a Go Web Application

Imagine a high-traffic e-commerce platform built with Go, facing the challenge of delivering real-time product recommendations to millions of users. To optimize this process, the development team decided to implement an LRU (Least Recently Used) cache to store frequently accessed product data.

Challenges:

- High Traffic: The platform experienced a substantial volume of user requests, making real-time recommendations computationally expensive.

- Database Load: Frequent database queries for product information resulted in significant database load and increased latency.

Solution:

The development team decided to implement an LRU cache in Go to alleviate these challenges. They chose an in-memory caching approach using a popular Go caching library.

Benefits and Performance Improvements:

- Reduced Database Load: By storing frequently accessed product data in the cache, the application significantly reduced the number of database queries. This led to a substantial reduction in database load, ensuring efficient use of database resources.

- Improved Response Times: Real-time product recommendations became significantly faster, as cached data could be retrieved directly from memory. Users experienced quicker page loads and received recommendations in real time, enhancing their overall experience.

- Scalability: The caching solution was designed to be scalable, allowing the platform to accommodate increasing user loads without compromising performance. Because the LRU cache had a fixed capacity, its memory use stayed bounded as the catalog grew, while the most requested products remained cached.

- Optimized Resource Utilization: The in-memory caching approach optimized resource utilization. Frequently requested product data was retained in memory, reducing the need for repetitive database queries.

- Cost Savings: With reduced database load, the platform experienced cost savings in terms of database infrastructure and maintenance.

By implementing an LRU cache in their Go web application, the e-commerce platform achieved substantial performance improvements, reduced database load, and delivered a more responsive user experience. This case study underscores the effectiveness of LRU caching in optimizing real-time data retrieval and enhancing the scalability and cost-efficiency of web applications.

LRU Cache Use Cases: Enhancing Performance in Real-World Scenarios

LRU (Least Recently Used) caching is a versatile and highly effective technique used in various real-world scenarios to optimize performance and enhance the user experience. Let’s explore some of the most common use cases where LRU caching proves invaluable:

1. Web Server Caching:

- Web servers use an LRU cache to store frequently accessed web pages, assets (such as images and stylesheets), and content. This reduces the response time for subsequent requests, providing a faster and more responsive user experience. Visitors to a website benefit from quicker page loads, and the server experiences reduced load, making it an ideal solution for high-traffic sites.

2. Database Query Caching:

- In database systems, LRU caching is employed to store the results of frequently executed queries. Subsequent identical queries can be answered from the cache, eliminating the need to repeatedly access the database. This significantly reduces database load, lowers query response times, and enhances the overall efficiency of database-driven applications.

3. Image Processing:

- Image processing applications, such as photo editing software or image compression tools, use LRU caching to store processed images, thumbnails, and frequently used filters. This allows users to work with images in real time, as the cached results are readily available. It’s especially valuable in scenarios where images undergo multiple transformations or manipulations.

4. File System Caching:

- Operating systems keep recently accessed file data in memory, typically managing it with LRU or an approximation of it. By keeping recently accessed files readily available, the operating system can provide faster file access times, resulting in improved system responsiveness. This caching strategy benefits both general computer usage and specialized applications.

5. API Response Caching:

- In web applications that interact with external APIs, LRU caching is employed to store API responses. Frequently requested data from third-party services is kept in the cache, reducing the need for redundant API calls. This not only speeds up application responses but also helps manage API rate limits and enhances reliability.

6. Content Delivery Networks (CDNs):

- CDNs commonly use LRU or LRU-like policies to decide which content, such as images, videos, and website assets, stays on edge servers located closer to end-users. This minimizes the latency associated with content retrieval, ensuring rapid content delivery and a seamless browsing experience.

7. Database Row-Level Caching:

- In database-driven applications, an LRU cache can operate at a more granular level, caching individual database rows or objects. This fine-grained caching is particularly useful when certain rows are accessed frequently, speeding up data retrieval.

LRU caching is a valuable tool in the arsenal of software developers and system administrators. By intelligently managing frequently accessed data, LRU caching significantly enhances application performance, reduces resource consumption, and ensures a smoother and more responsive user experience in a wide range of real-world scenarios.

Challenges and Pitfalls in LRU Cache Implementation

While LRU (Least Recently Used) caches offer numerous advantages, they also come with their set of challenges and potential pitfalls. Here are some common issues and strategies to address them:

1. Cache Size and Capacity:

- Challenge: Determining the optimal cache size and capacity can be tricky. Overestimating or underestimating these parameters can lead to inefficiency.

- Strategy: Regularly monitor cache usage, adjust the size, and employ cache eviction strategies to manage capacity effectively.

2. Cache Misses:

- Challenge: Cache misses can occur when an item is not found in the cache. Frequent cache misses can degrade performance.

- Strategy: Implement strategies to handle cache misses, such as fetching the missing data from the data source, updating the cache, and considering prefetching or loading cache items on demand.

3. Cold Starts:

- Challenge: A cold start occurs when the cache is initially empty, leading to performance delays until the cache populates.

- Strategy: Implement warm-up routines or populate the cache with frequently accessed data during application startup to minimize cold start impact.

4. Cache Consistency:

- Challenge: Maintaining cache consistency when the underlying data changes can be complex. Stale or outdated data in the cache can lead to incorrect results.

- Strategy: Implement cache invalidation or update mechanisms to ensure that cached data remains consistent with the source data. Consider using a time-to-live (TTL) approach to automatically expire cache entries.

5. Thread Safety:

- Challenge: In multi-threaded environments, ensuring cache access is thread-safe is essential to prevent race conditions.

- Strategy: Implement synchronization mechanisms such as mutexes or channels to ensure thread safety and data integrity.

6. Eviction Policies:

- Challenge: Selecting the appropriate eviction policy (e.g., LRU, LFU, or FIFO) is crucial. The wrong policy can impact cache efficiency.

- Strategy: Choose the eviction policy that best suits your specific use case and regularly evaluate its effectiveness.

7. Memory Management:

- Challenge: Managing memory allocation for the cache can be a concern, especially in low-memory environments.

- Strategy: Optimize memory usage by employing efficient data structures and paying attention to the memory footprint of cached items.

Addressing these challenges and pitfalls requires careful consideration during the design and implementation of LRU caches. Tailoring cache strategies to the specific needs of your application and continuous monitoring and fine-tuning are essential for maintaining efficient and reliable caching solutions.

Future Trends in Caching: Meeting the Demands of Modern Computing

Caching is evolving to meet the demands of modern computing and changing architectural paradigms. Several emerging trends and technologies are shaping the future of caching:

1. Edge Caching: With the growth of edge computing, caching is moving closer to end users. Edge caches, deployed at network locations near users, reduce latency by serving content without a round trip to the origin server. This trend matters most for latency-sensitive workloads such as IoT and real-time content delivery.

2. Machine Learning-Powered Caching: Machine learning algorithms are being used to predict which data should be cached and when to evict it. By analyzing access patterns, machine learning can optimize caching strategies, making caches smarter and more efficient.

3. Distributed Caching: Caching is becoming more distributed, with cache data spread across multiple nodes or locations. Distributing a cache this way can improve availability, scalability, and fault tolerance in modern applications.

4. Containerization and Kubernetes: Containerized applications and orchestrators like Kubernetes are influencing caching. Caches can be containerized, making them more portable and easier to manage in containerized environments.

5. Cloud-Native Caching: Cloud-native architecture leverages microservices, serverless functions, and container orchestration in the cloud. Caching is evolving to support cloud-native applications by providing elastic and scalable caching solutions.

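6. GraphQL and API Caching: As GraphQL gains popularity, API caching is becoming more complex. Because GraphQL clients typically send many different queries to a single endpoint, often over POST, conventional URL-based HTTP caching is less effective. Caches need to key on the query and its variables, or on the individual objects a query returns, and invalidate those entries when the underlying data changes.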

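7. Serverless Caching: Functions on serverless platforms like AWS Lambda and Azure Functions are short-lived and can suffer cold starts, so caching, whether in memory across warm invocations or in an external cache service, is often used to reduce execution times. Serverless-specific caching solutions are emerging to optimize these environments.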

8. Sustainability and Energy-Efficient Caching: Energy-efficient caching solutions are being developed to reduce the environmental impact of data centers. These caches aim to minimize power consumption while still delivering high performance.

The future of caching is closely tied to the evolving landscape of computing and data management. As applications become more distributed and cloud-native, caching solutions will continue to adapt to meet the demands of modern, dynamic workloads. Leveraging emerging technologies and trends, caching will remain a critical component in delivering high-performance and responsive applications.

Conclusion: The Power of LRU Caching in Software Optimization

In the world of software optimization, caching plays a pivotal role in enhancing performance and responsiveness. Among the various caching strategies, the LRU (Least Recently Used) cache stands as a timeless and effective solution for maintaining frequently accessed data in a readily available state. This blog post has shed light on the significance of LRU caching and its many facets.

Key takeaways from this exploration of LRU caching include:

1. Performance Enhancement: LRU caching accelerates data retrieval by keeping recently accessed information in memory. This leads to faster response times, lower latency, and a smoother user experience.

2. Memory Efficiency: An LRU cache has a bounded capacity and automatically evicts the least recently used entry when it is full, keeping memory use predictable while recently used data stays accessible.

3. Scalability and Concurrency: With proper synchronization, LRU caches can be used safely in multi-threaded and distributed environments, where they reduce load on backing data stores and help applications handle more users and data.

4. Real-World Applications: LRU caching finds applications in various scenarios, from web servers and databases to content delivery networks and recommendation engines.

5. Ongoing Optimization: Regular monitoring, profiling, and fine-tuning are essential for maintaining cache performance over time.

As software ecosystems continue to evolve, the importance of efficient caching strategies, including LRU caching, cannot be overstated. By embracing caching techniques and adapting them to modern computing paradigms, developers can ensure that their applications meet the ever-increasing demand for speed, efficiency, and responsiveness. In the dynamic landscape of software development, LRU caching remains a powerful tool for achieving these goals and optimizing software performance.